Downside at AI. Part #2

Red Flags in AI: Satya’s Take, Nvidia’s Margins & the Custom Silicon Threat.

In Part 1 of Downside at AI (from last October) we discussed investing in the AI theme in a top-down manner — and the misunderstandings around it.

I illustrated the Techno-AI Boom/Bust cycle using Soros theory and terminology, concluding with the flaw in the process: “How much value does AI really create?”

On February 19th, Satya Nadella practically raised the same question in the interview he gave on the Dwarkesh Podcast. The indices peaked that day and have sold off since.

Satya’s responses raised some red flags. We will discuss them in this post as well as:

The DeepSeek event

Microsoft distancing itself from OpenAI

Commoditisation of AI, across the board

Where is the value creation?

Nvidia margin compression

Custom Silicon and the ASIC threat to GPUs

Let’s start chronologically…

In late January of this year, the DeepSeek AI chatbot was released, powered by the DeepSeek R1 model.

The DeepSeek precedent shook markets, and the Semis/AI theme specifically, by proving it could match the performance of other AI chatbots like ChatGPT while using significantly fewer resources.

The response was that DeepSeek was lying, cheating, stealing or all of the above. I can’t know what the absolute truth is, so I won’t bother trying to figure it out. We aren’t trying to shoot for certainties and absolute truths here — we are always decision-making under uncertainty.

This is what I wrote at the time.

EXCERPT from Is DeepSeek the needle that pins the bubble? 📌

However, the DeepSeek event has made a number of expectations a REALITY — and that reality sets in motion other events and raises certain questions.

My thoughts in bullets:

You cannot ban and sanction your way to AI Supremacy, a concept I have been saying does not really exist. The more you push the Chinese (or others), the more they would react. We knew this, here.

We see now that the development of LLMs is not an extremely high-cost, esoteric process reserved for a handful of companies that can muster the required resources. It is now basically democratised and available to entities with far fewer resources.

This throws a monkey wrench in the BIG IDEA of hoarding GPUs and buying as many as possible, to maintain a supremacy and lead in the AI Race.

Will this cause Big Tech (i.e. the big spenders) to pull back just a tad bit in their capex spending? All those Data Centers that they have been getting grilled on? All that capex which hasn’t really had an ROI?

What will happen to NVDA’s 80% Gross Margins that we’ve been talking about, anon?

Will the whole Semis/AI complex now become a price TAKER rather than a price SETTER? Oof, this is a big one. This is the money right here…

Will big AI-related projects “pause” due to a wait and see? This is highly probable.

END OF EXCERPT

Back to Satya’s red flags now 🚩🚩🚩

In this part of the interview, Satya touches on the need to build the infrastructure to serve those compute needs, but then avoids the question of where Microsoft's AI growth will come from.

“The buyers will not tolerate winner-take-all”… Structurally hyperscale will never be winner-take-all.

“That I think will also happen even in the model side.”

Instead of tackling the question and laying out his vision, he started talking about GDP growth of 10% and comparing AI to the industrial revolution.

As an economist, “We have a real growth challenge.”

“The big winners here are not going to be tech companies, the winners are going to be the broader industry that uses this commodity that by the way is abundant.”

“And if suddenly productivity goes up and the economy grows faster — when that happens we will be fine as an industry, that’s the moment.”

“Us self claiming some AGI milestone that’s just nonsensical benchmark hacking”… “the real milestone is, Is the world growing at 10%?”

Demand/Supply mismatches, but for how long? “Let me build it and they will come…after all we’ve done that, we’ve taken enough risk, but at some point..the supply and demand have to match.”

Minute 21:40: “There will be overbuild… The memo has gone out that you need more energy and more compute.. in fact it’s not just companies deploying, countries are going to deploy.”

“I am thrilled to be leasing a lot of capacity because I am looking at the builds in ‘27 and ‘28 and this is fantastic.”

“The only thing that’s going to happen with all the compute build is — prices are going to come down.”

“At scale, nothing is commodity.”

What is the implication of Satya’s remarks?

I think Satya here is confessing that data centres/compute/hyperscalers are doomed to become a commodity. He did say, “At scale, nothing is commodity” — but I am not sure even he understood what he said there.

Firstly, this is not only about AI workloads — this holds true about any workload going through the Cloud.

Satya gave historical examples that in times of massive change (like now), you have to get the tech trend right to survive — but also the business model.

So, are data centres the correct business for this massive shift? Is it the LLM? Or is it applications that run on that infrastructure?

“I am thrilled to be leasing a lot of capacity because I am looking at the builds in ‘27 and ‘28 and this is fantastic.”

By saying this, he corroborates that the value add is not on the compute capacity side, because you don’t have to be Microsoft or Amazon to build it. If Microsoft is leasing 3rd party capacity, then by definition others can build it.

“The only thing that’s going to happen with all the compute build is — prices are going to come down.”

Blatant admission that compute cost has only one way to go, and that’s down.

“There will be overbuild… The memo has gone out that you need more energy and more compute.. in fact it’s not just companies deploying, countries are going to deploy.”

Re-confirming the overbuild angle with the above. So where is the competitive advantage?

“Let me build it and they will come…after all we’ve done that, we’ve taken enough risk, but at some point..the supply and demand have to match.”

Does this imply that the space is already close to overbuild? Maybe.

“The buyers will not tolerate winner-take-all”… Structurally hyperscale will never be winner-take-all.

“That I think will also happen even in the model side.”

Satya here is explaining that hyperscale will never be dominated by 1 (or a few) players because of the dynamics in the space. I think buyers understand the power dynamics here and make sure to diversify by leasing from several providers at the same time.

—> Then he says that the same will happen “even in the model side”.

i.e. That LLMs will not be winner-takes-all either. Does that mean they will be commoditised too? Maybe.

Things don’t have to be binary or absolute, they can exist on a spectrum. The point here is no one will dominate any market, and beyond that, competition will be fierce.

Microsoft distancing itself from OpenAI?

With the announcement of the Stargate Project, Microsoft is no longer OpenAI's exclusive cloud provider, with others like SoftBank jumping in to fill that void.

In the past, Microsoft needed to integrate OpenAI's models into its suite of products to gain a lead in the Cloud/AI race, but now Microsoft is (mildly) pivoting away from OpenAI. (We discussed the dynamics of this in Weaponisation of AI, here.)

Why could this be?

Microsoft saw that LLMs were proliferating, both open-source and closed-source, and that OpenAI would soon become just one of many, so they didn’t need them.

Microsoft realised that the AI ecosystem is moving towards a multi-model approach and that its customers did not want to be tied to OpenAI, so Microsoft customers didn’t want them.

That OpenAI had consolidated too much power and was possibly losing direction with the profit/non-profit talk. Microsoft doesn’t need all that attention.

Microsoft developed its own models, like MAI. That meant the two were now antagonists rather than purely partners.

The Microsoft-OpenAI arrangement was prone to antitrust issues, and so it was time to defuse that risk by retreating from OpenAI.

And finally, that being tied exclusively to OpenAI meant Microsoft had to underwrite the downside too. That is to say, extend capital to OpenAI whenever they needed it, fund their operating losses etc.

With the increasing scrutiny from investors recently on AI profitability and margins (Refer to Downside at AI, Part #1), Microsoft had no such appetite.

Despite all this, Microsoft is still very much in bed with OpenAI, as the recent announcement of January 21st explains:

We are thrilled to continue our strategic partnership with OpenAI and to partner on Stargate. Today’s announcement is complementary to what our two companies have been working on together since 2019.

The key elements of our partnership remain in place for the duration of our contract through 2030, with our access to OpenAI’s IP, our revenue sharing arrangements and our exclusivity on OpenAI’s APIs all continuing forward – specifically:

Microsoft has rights to OpenAI IP (inclusive of model and infrastructure) for use within our products like Copilot. This means our customers have access to the best model for their needs.

The OpenAI API is exclusive to Azure, runs on Azure and is also available through the Azure OpenAI Service. This agreement means customers benefit from having access to leading models on Microsoft platforms and direct from OpenAI.

Microsoft and OpenAI have revenue sharing agreements that flow both ways, ensuring that both companies benefit from increased use of new and existing models.

Microsoft remains a major investor in OpenAI, providing funding and capacity to support their advancements and, in turn, benefiting from their growth in valuation.

In addition to this, OpenAI recently made a new, large Azure commitment that will continue to support all OpenAI products as well as training. This new agreement also includes changes to the exclusivity on new capacity, moving to a model where Microsoft has a right of first refusal (ROFR). To further support OpenAI, Microsoft has approved OpenAI’s ability to build additional capacity, primarily for research and training of models.

Commoditisation of AI, across the board

So up to now we have Satya proclaiming that compute will be abundant and cheap — and that models will take the same path of abundance and commoditisation.

We see DeepSeek operating just fine regardless of what the US did to shut the Chinese out of the AI space, with the whole “AI Supremacy” slogan etc.

The question now is, where will margins and returns on investment settle as the sector matures? Are hyperscalers reporting massive profits simply because there is still a supply/demand mismatch in the space, or because they still hold the cards, in terms of this still being a seller’s market?

—> I think this still is a seller’s market as big players like Microsoft are leasing 3rd party capacity, so why would they lower their prices on their own fleet? No reason to, business is still booming.

Where is the Value Creation?

On the valuations side, for one! 😂

People have made big money being long this. PLTR is up >10X in the past two years. NVDA hasn’t done so bad either, as the foremost picks-and-shovels play on Semis/AI.

But if returns on Data Centre capex decline as we complete the adoption phase, reflecting commodity-type / capital-intensive ROICs — then it would just be another average business.

And if the models/chatbots don’t find ways to monetise — then they will be worse than Linux! The subscription model makes the most sense to me, but I think it is still difficult to break even and create consistent value with so much competition in that space.

As I explained in this tweet, ChatGPT has 400mln users because it is free.

ChatGPT has 400mln total users and about 10mln paid ones.

Most of the paid ones use it for things that other chatbots can/will be able to do as well. So competition will only go up, ChatGPT is not irreplaceable.

Naturally, as total users go up for AI apps like ChatGPT and others, demand for Data Centers goes up.

Question is, who is footing that bill? Because we know ChatGPT isn't profitable.

Point is, ChatGPT has 400mln users because it's FREE. That being said, is ChatGPT a necessity for the millions using it every day? Or is it just a convenience? How many of those 400 and growing millions will eventually pay for ChatGPT?
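To put the paid share in perspective, here is a minimal sketch of the arithmetic using only the two round user counts quoted above. The function and variable names are mine, purely for illustration, and the inputs are the post's approximate figures rather than audited data.

```python
def paid_share(paid_users: float, total_users: float) -> float:
    """Return the fraction of total users who are paying users."""
    if total_users <= 0:
        raise ValueError("total_users must be positive")
    return paid_users / total_users


# Round figures quoted in the post, in millions of users.
TOTAL_USERS_MLN = 400
PAID_USERS_MLN = 10

share = paid_share(PAID_USERS_MLN, TOTAL_USERS_MLN)

# How many non-paying users exist for every paying one.
free_per_paid = (TOTAL_USERS_MLN - PAID_USERS_MLN) / PAID_USERS_MLN

print(f"Paid share of users: {share:.1%}")
print(f"Free users per paying user: {free_per_paid:.0f}")
```

On these numbers, about 2.5% of users pay, so each paying user sits alongside roughly 39 who don't.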

There is no doubt that AI Chatbots have been an amazing innovation and productivity booster, but can they create value in the land of capital? Would you bet on it?

So Philo, where is the value creation?

PICKS & SHOVELS

Nvidia has generated over $100bln in cumulative EBIT over the past two financial years.

It’s obvious that NVDA has been the quintessential AI play up to now, but the waters are getting murky.

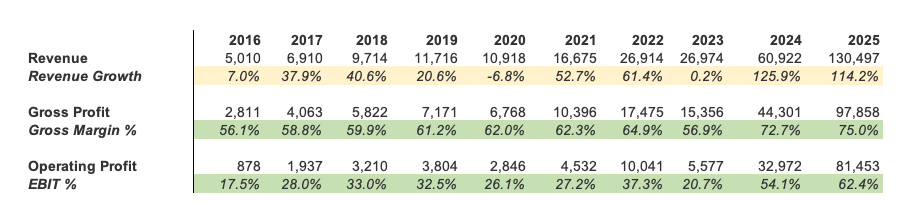

Note that Nvidia’s financial year does not end on December 31st, but on the last Sunday each January… So FY2024 is basically calendar 2023.

2023 (FY2024) was the first year of explosive AI-related growth, with a 126% jump in revenue and a 6X jump in EBIT! I note that gross margins expanded to a massive 73% from 57% in the previous year, aided by the jump in GPU sales.

Gross margins expanded another 3% the next year (FY2025), and another doubling of revenues took EBIT to $82bln.

But things seem to be changing under the surface…

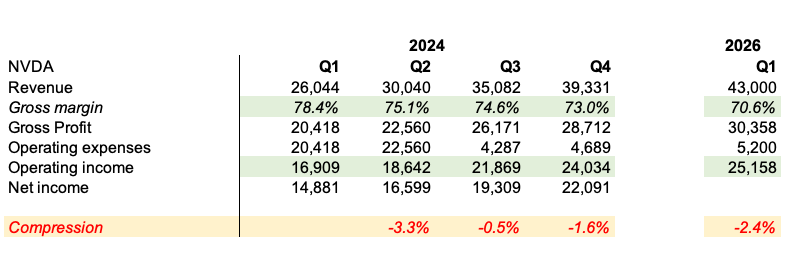

The stock sold off after Q4 earnings late last month for, I believe, one reason: margins.

The table above shows the q/q progress of Nvidia’s business; the compression row shows the reduction in gross margin. Guidance for the next quarter is for another 240bps drop, which means EBIT stays ~steady even with a projected 9% increase in sales.
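The interaction between a falling gross margin and rising sales can be sketched in a few lines. This is a rough illustration, not a model of Nvidia's income statement: the 240bps drop and 9% sales growth come from the guidance mentioned above, while the 73% starting gross margin is my placeholder assumption, and operating expenses are not modelled at all.

```python
def gross_profit_growth(gross_margin: float, margin_drop_bps: float,
                        sales_growth: float) -> float:
    """Growth in gross profit dollars when sales grow and margin compresses."""
    new_margin = gross_margin - margin_drop_bps / 10_000
    return (1 + sales_growth) * new_margin / gross_margin - 1


def breakeven_sales_growth(gross_margin: float, margin_drop_bps: float) -> float:
    """Sales growth needed just to keep gross profit dollars flat."""
    new_margin = gross_margin - margin_drop_bps / 10_000
    return gross_margin / new_margin - 1


ASSUMED_GROSS_MARGIN = 0.73  # placeholder assumption, not taken from the table
MARGIN_DROP_BPS = 240        # guided compression, from the post
SALES_GROWTH = 0.09          # projected q/q sales increase, from the post

gp_growth = gross_profit_growth(ASSUMED_GROSS_MARGIN, MARGIN_DROP_BPS, SALES_GROWTH)
breakeven = breakeven_sales_growth(ASSUMED_GROSS_MARGIN, MARGIN_DROP_BPS)

print(f"Gross profit growth: {gp_growth:.1%}")
print(f"Sales growth needed to hold gross profit flat: {breakeven:.1%}")
```

Under that assumed starting margin, gross profit dollars grow by about 5% on 9% higher sales, and the first 3% or so of sales growth is needed just to stand still. How much of the remainder reaches EBIT depends on operating expenses, which this sketch leaves out.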

The market could soon ask, “If your gross margins are compressing now, what will happen in a downturn?”

Note that NVDA is selling for $2.9trln as of now, with last year’s earnings at $70bln. That is 41X earnings.

If there isn’t major growth on the bottom line next year, should it keep that multiple?
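As a back-of-the-envelope check on that multiple, the sketch below recomputes it from the two figures quoted above and then asks how much earnings would need to grow for the same market cap to sit on a lower multiple. The 30X and 25X targets are hypothetical values I picked for illustration, not forecasts.

```python
MARKET_CAP_BLN = 2_900  # $2.9trln, as quoted in the post
EARNINGS_BLN = 70       # last year's earnings, as quoted in the post

# Price-to-earnings multiple and its inverse, the earnings yield.
pe_multiple = MARKET_CAP_BLN / EARNINGS_BLN
earnings_yield = EARNINGS_BLN / MARKET_CAP_BLN


def required_earnings(market_cap_bln: float, target_multiple: float) -> float:
    """Earnings needed for a given market cap to trade at target_multiple."""
    return market_cap_bln / target_multiple


print(f"P/E: {pe_multiple:.1f}X, earnings yield: {earnings_yield:.1%}")

# Hypothetical target multiples, chosen only to illustrate the sensitivity.
for target in (30, 25):
    needed = required_earnings(MARKET_CAP_BLN, target)
    growth = needed / EARNINGS_BLN - 1
    print(f"At {target}X: earnings of ${needed:.0f}bln needed ({growth:.0%} growth)")
```

Read the other way, if earnings stay flat and the multiple compresses to one of those levels, the market cap falls by the same proportion that the multiple does.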

The Rise of Custom Silicon

Custom Silicon and technologies that challenge the dominance of GPUs in the AI game warrant a whole piece of their own, so I will not compress my work just to make it fit in this one.

I will be back with my thoughts on this theme, as soon as possible.

Sincerely,

Philo 🦉

Is it going from Mag 7 to Lag 7?